By:

Steven Andersen

Product Marketing Leader Precision Instruments

Published on:

October 14th, 2024


Three Components of Pressure Calibration for Critical Process Instruments

This article was originally published on October 2, 2023, and updated on October 14, 2024.

A wide range of industries rely on pressure measurement equipment, including manufacturing, water/wastewater, hydraulics, food and beverage, medical, and pharmaceutical, among others. If you are new to any of these markets, you need to understand the importance of monitoring and maintaining appropriate pressure levels in your industry's critical processes. Accurate pressure measurement in these processes is essential for safety, process control and efficiency, and achieving those goals requires periodic instrument calibration.

With more than 12 years of specialization in precision instruments, I have witnessed first-hand the consequences of neglecting calibration. As a follow-up to my blog "How to Check the Calibration of a Pressure Gauge," this article examines in more detail the circumstances that call for calibration, focuses on its three most critical factors, and discusses the considerations associated with each.

When you finish reading, you will have a solid foundation of knowledge regarding pressure instrument calibration and be ready to dig deeper into the topic with the additional resources provided for your reference.

What is pressure calibration?

Calibration is the process of verifying an instrument's accuracy against a known reference and, when necessary, adjusting the instrument to bring its readings back into specification. It ensures that a sensor continues to provide reliable measurements, judged by the following three factors:

- Accuracy describes how close a set of measurements (observations or readings) is to the standard they are being compared against. For pressure gauges, accuracy is usually quantified as a percentage of the instrument span.

- Tolerance represents the maximum allowable deviation from a specified value, and it is often expressed in the instrument's units of measure. For example, a gauge with a 100 psi (pounds per square inch) range and an accuracy of ±1% of span has a tolerance of ±1.0 psi at any point on the scale.

- Precision refers to how closely grouped, or repeatable, the measurements are relative to one another. Consider a pressure sensor in an industrial process that is calibrated for a specific range, say 0 to 1000 psi. To test its precision, you apply various pressures within that range, repeat the readings at each point, and record the results; a high-precision sensor returns readings that cluster tightly at each point.

Each of these factors is considered in all aspects of the calibration process.
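To make the tolerance example concrete, here is a minimal Python sketch of the arithmetic. It assumes accuracy is stated as a symmetric ± percentage of span, and the function names are hypothetical, chosen for illustration rather than taken from any calibration software.

```python
def tolerance_from_span(range_low: float, range_high: float, accuracy_pct_span: float) -> float:
    """Return the ± tolerance in engineering units for a % of span accuracy rating."""
    span = range_high - range_low
    return span * accuracy_pct_span / 100.0


def error_pct_of_span(reading: float, reference: float, range_low: float, range_high: float) -> float:
    """Express a reading's deviation from the reference as a percentage of span."""
    span = range_high - range_low
    return (reading - reference) / span * 100.0


# 0-100 psi gauge rated at ±1% of span -> ±1.0 psi tolerance
tol = tolerance_from_span(0.0, 100.0, 1.0)
print(f"Tolerance: ±{tol:.2f} psi")

# Hypothetical reading of 50.6 psi against a 50.0 psi reference
err = error_pct_of_span(50.6, 50.0, 0.0, 100.0)
print(f"Error: {err:+.2f}% of span")
```

Because the tolerance is tied to span rather than to the reading, the same ±1.0 psi band applies at 10 psi and at 90 psi.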

Figure 1: Seven reasons pressure calibration may be needed

How accuracy is measured in the pressure calibration process

When calibrating pressure measuring equipment, it's crucial that the reference standard you use is significantly more accurate than the device under test. According to American Society of Mechanical Engineers (ASME) guidelines, the reference should be at least four times more accurate than the device you're testing. This is often referred to as a 4 to 1 ratio (4:1).

For example, if you have a gauge with a 0-100 psi range that is accurate within ±1% of span (that is, ±1.0 psi), you should calibrate it using a reference standard accurate to within ±0.25 psi or better.
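The 4:1 rule reduces to simple division. This sketch assumes the device and reference tolerances are both symmetric ± values in the same units (psi here); `required_reference_tolerance` and `meets_accuracy_ratio` are illustrative names, not part of any standard or library.

```python
def required_reference_tolerance(dut_tolerance: float, ratio: float = 4.0) -> float:
    """Loosest reference tolerance that still achieves the required ratio."""
    return dut_tolerance / ratio


def meets_accuracy_ratio(dut_tolerance: float, reference_tolerance: float, ratio: float = 4.0) -> bool:
    """True if the reference is at least `ratio` times more accurate than the device under test."""
    return dut_tolerance / reference_tolerance >= ratio


# 0-100 psi gauge, ±1% of span -> ±1.0 psi tolerance
dut_tol = 100.0 * 1.0 / 100.0
print(f"Reference must be within ±{required_reference_tolerance(dut_tol):.2f} psi")

# Two hypothetical candidate references
print(meets_accuracy_ratio(dut_tol, 0.25))  # True: exactly 4:1
print(meets_accuracy_ratio(dut_tol, 0.5))   # False: only 2:1
```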

Primary vs. Secondary Standards

In metrology, calibration standards fall into two categories: primary standards and secondary standards.

A primary standard is an instrument whose calibration is calculated from the knowledge of its significant dimensions and physical constants and is traceable to a country’s national metrology institute, such as the National Institute of Standards and Technology (NIST), USA.

A deadweight tester is an example of a primary standard. It measures pressure through the direct application of “force over area” with the use of pistons and weights. This is a valuable calibration tool to validate the measurement accuracy of secondary standards such as digital or dial-mechanical gauges.

Figure 2: Primary Standard - Deadweight Tester
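The "force over area" principle can be written as P = F/A = m·g/A. The sketch below computes the ideal pressure a given mass generates on a piston. The mass and piston area are hypothetical values, standard gravity is used as a default, and it deliberately ignores the corrections a real deadweight tester calculation applies (local gravity, air buoyancy, piston temperature and elastic distortion), so treat it as an illustration of the principle rather than a calibration procedure.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2, standard acceleration of gravity
PA_PER_PSI = 6894.757       # pascals per psi (approximate conversion factor)


def deadweight_pressure_pa(mass_kg: float, piston_area_m2: float, g: float = STANDARD_GRAVITY) -> float:
    """Ideal deadweight pressure: force (mass * gravity) divided by effective piston area."""
    force_n = mass_kg * g
    return force_n / piston_area_m2


# Hypothetical 10 kg load on a piston with a 1 cm^2 (1e-4 m^2) effective area
p_pa = deadweight_pressure_pa(10.0, 1.0e-4)
print(f"{p_pa:.0f} Pa = {p_pa / PA_PER_PSI:.2f} psi")
```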

A secondary (also called reference) standard is a device, such as a digital gauge or handheld calibrator, that is typically validated against a primary standard.


Figure 3: Secondary Standard – Digital Pressure Gauge

Figure 4: Secondary Standard – Handheld Calibrator

What conditions affect pressure calibration accuracy, tolerance and precision?

When you're checking how well a measuring instrument is working, it's important to consider the conditions under which the calibration takes place. Most instrument calibration is performed under controlled conditions, such as an indoor, temperature-controlled space, but testing in less favorable environments can affect your readings and outcomes.

For example, extreme temperatures, changes in ambient air pressure, vibration, humidity and even electromagnetic interference can all degrade an instrument's accuracy and precision and push its readings outside tolerance. If these conditions are not controlled, they can introduce errors into the measurements.

Depending on these factors, your results may vary. Ideally, everything aligns and the calibration outcome is both precise (readings tightly grouped) and accurate (readings close to the reference value).

Figure 5: Precision vs. Accuracy
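One way to see the difference between precision and accuracy is to split repeated readings at a single test point into bias (the average offset from the reference, which reflects accuracy) and scatter (the sample standard deviation, which reflects precision). The sketch below uses Python's standard `statistics` module; the readings and both acceptance limits are hypothetical values for illustration, not limits from ASME or any other standard.

```python
from statistics import mean, stdev


def assess_readings(readings, reference, accuracy_limit, precision_limit):
    """Classify repeated readings taken at one test point.

    Bias (mean minus reference) reflects accuracy; the sample standard
    deviation reflects precision. Limits share the readings' units and
    are hypothetical inputs chosen for this example.
    """
    bias = mean(readings) - reference
    scatter = stdev(readings)  # needs at least two readings
    return {
        "bias": bias,
        "scatter": scatter,
        "accurate": abs(bias) <= accuracy_limit,
        "precise": scatter <= precision_limit,
    }


# Hypothetical: five repeated readings at a 50.0 psi reference point
result = assess_readings([50.9, 51.0, 51.1, 50.95, 51.05], 50.0, accuracy_limit=0.25, precision_limit=0.1)
print(result)
```

With these made-up numbers the readings are tightly grouped but sit about 1 psi high, so they come out precise but not accurate.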

How many test points do you need for pressure calibration?

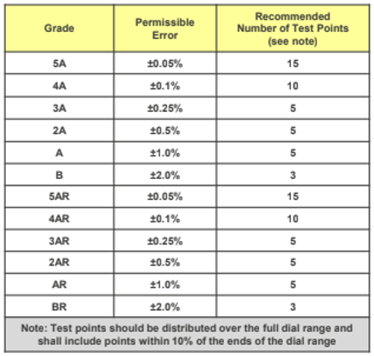

Another important factor to think about with pressure instrument calibration is the number of test points you need to check the full range of the instrument. The better the instrument's accuracy, the more test points you should examine.

Guidelines such as those from ASME provide recommendations for this. For instance, for a gauge that is accurate within 1% to 0.25% of its full range, they suggest taking 10 readings: 5 as you increase pressure and 5 as you decrease it, each at 20% intervals of the full range. For a high-accuracy gauge, accurate within 0.1% of the full range, it's better to take 10 readings going up and 10 going down.

Figure 6: Recommended Test Points for Pressure Calibration
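The test-point guidance above can be turned into a short planning helper. This is a sketch under stated assumptions: only the two accuracy classes mentioned in the text are mapped (anything else raises an error rather than guessing), the points are assumed to start one interval above the bottom of the range, and the top of the range is read in both directions. Check the applicable standard for exact point placement; the function names are hypothetical.

```python
def points_per_direction(accuracy_pct_span: float) -> int:
    """Map the two accuracy classes described in the text to readings per direction."""
    if accuracy_pct_span <= 0.1:
        return 10
    if 0.25 <= accuracy_pct_span <= 1.0:
        return 5
    raise ValueError("accuracy class not covered here; consult the applicable standard")


def calibration_points(range_low: float, range_high: float, n: int):
    """Ascending then descending test pressures at equal fractions of span.

    Assumption: points start one interval above range_low and the top
    of the range is read in both directions.
    """
    span = range_high - range_low
    up = [range_low + span * i / n for i in range(1, n + 1)]
    down = list(reversed(up))
    return up, down


n = points_per_direction(1.0)
up, down = calibration_points(0.0, 100.0, n)
print("Increasing:", up)
print("Decreasing:", down)
```

For a 0-100 psi gauge rated at ±1% of span, this yields 20, 40, 60, 80 and 100 psi going up, then the same points in reverse going down.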

Remember, different industries have their own requirements and needs for checking and adjusting instruments. Whoever is conducting the calibration will need to follow local and industry standards.

Here’s another example: some industries require you to verify a gauge's accuracy before making any adjustments (these are known as "as received" or "as found" readings). It's important to confirm whether the gauge still meets its specifications when it is taken out of service for recalibration. If it is out of spec at that point, you may need to evaluate how its inaccuracy affected your processes while it was in use.
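An as-found check amounts to comparing each recorded reading against the gauge's tolerance before anything is adjusted. In this minimal sketch, the record format (pairs of reference pressure and gauge reading), the function name and the sample values are all hypothetical; any out-of-tolerance points are what would prompt a review of how the gauge may have affected the process.

```python
def as_found_check(points, tolerance):
    """Return out-of-tolerance points from an as-found record.

    Each entry is a (reference_pressure, gauge_reading) pair in the same units;
    the tolerance is a symmetric ± value in those units.
    """
    failures = []
    for reference, reading in points:
        error = reading - reference
        if abs(error) > tolerance:
            failures.append((reference, reading, error))
    return failures


# Hypothetical as-found record for a 0-100 psi gauge with a ±1.0 psi tolerance
record = [(20.0, 20.4), (40.0, 40.8), (60.0, 61.3), (80.0, 80.9), (100.0, 100.2)]
out_of_tol = as_found_check(record, tolerance=1.0)
if out_of_tol:
    print("As found: OUT OF TOLERANCE; review process impact:", out_of_tol)
else:
    print("As found: within tolerance")
```

Here the 60 psi point reads about 1.3 psi high, so the gauge would be flagged as out of tolerance as found.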

There’s so much more to learn about pressure calibration!

Now that you have a basic understanding of pressure calibration and the primary factors to consider when performing it, such as accuracy, tolerance and precision, are you ready to dive a little deeper into the subject? If so, here are a few other articles we recommend:

- How to Check the Calibration of a Pressure Gauge

- How Often Should I Check the Calibration of My Pressure Gauge?

- What is Thermocouple Calibration (and What are its Benefits)?

- Why and How to Perform a Thermocouple Calibration

Also, you can talk to one of our industry experts and get all your pressure instrument questions answered. In the meantime, download our guide on Accuracy Statements.

Steven Andersen, Product Marketing Leader Precision Instruments

Steven Andersen has worked at Ashcroft for 13 years in Product Management and Product Leader positions in the Precision Instruments group. He has over 30 years of experience in industrial instrumentation. In his free time, he enjoys seeing live music, boating, camping and fishing.